I am a researcher at Shanghai AI Lab in Shanghai, working on fundamental research on pretrained language models. My current focus is large language models, long-context reasoning, and agent-driven data synthesis. If you are interested in any form of academic cooperation, please feel free to email me at chenzhi@pjlab.org.cn. I received my Ph.D. from Shanghai Jiao Tong University (上海交通大学), advised by Kai Yu (俞凯) and Lu Chen (陈露).

My research interests include large language models, long-context reasoning, and reinforcement learning. I have published more than 20 papers at top international conferences such as ACL, EMNLP, NAACL, AAAI, and ICML, and in top international journals such as TACL and TASLP.

🔥 News

- 2024.06: One paper is accepted by ACL 2024!

- 2024.05: One paper is accepted by ICML 2024!

- 2024.03: The technical report of InternLM2 has been released. See InternLM2 for more details.

- 2023.10: One demo paper (Chinese LLM Evaluation Platform) is accepted by EMNLP 2023!

- 2023.03: I joined Shanghai AI Lab as a researcher!

- 2023.02: One paper is accepted by NCMMSC (Best Paper Award)!

- 2023.01: One journal paper is accepted by TACL!

- 2022.12: One paper is accepted by EMNLP 2022!

📝 Publications

🎙 Journal Papers

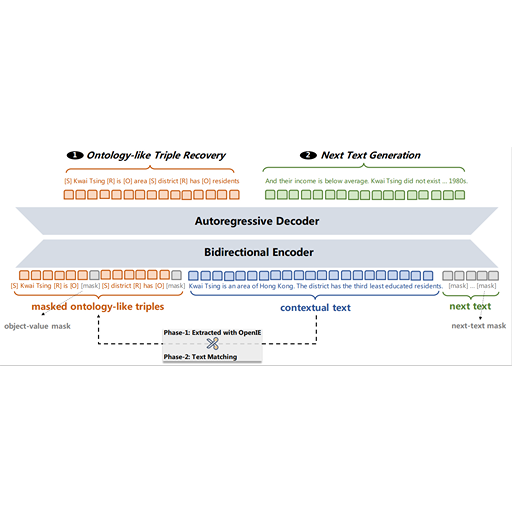

OPAL: Ontology-Aware Pretrained Language Model for End-to-End Task-Oriented Dialogue

Zhi Chen, Yuncong Liu, Lu Chen, Su Zhu, Mengyue Wu and Kai Yu

- OPAL is the first pretrained language model for end-to-end task-oriented dialogue (TOD). Unlike chit-chat dialogue models, task-oriented dialogue models involve at least two task-specific modules: a dialogue state tracker (DST) and a response generator (RG). The dialogue state consists of domain-slot-value triples, which are treated as the user's constraints for searching the domain-related databases. To bridge the gap between the pretraining method and the downstream tasks, we design two pretraining tasks, ontology-like triple recovery and next-text generation, which simulate the DST and RG, respectively.

- TASLP: Distributed Structured Actor-Critic Reinforcement Learning for Universal Dialogue Management, Zhi Chen, Lu Chen, Xiaoyuan Liu and Kai Yu

- TASLP: Agentgraph: Toward Universal Dialogue Management with Structured Deep Reinforcement Learning, Lu Chen, Zhi Chen, Bowen Tan, Sishan Long, Milica Gašić and Kai Yu

- Applied Sciences: DIR: A Large-Scale Dialogue Rewrite Dataset for Cross-Domain Conversational Text-to-SQL, Jieyu Li, Zhi Chen, Lu Chen, Zichen Zhu, Hanqi Li, Ruisheng Cao and Kai Yu

- Applied Sciences: Relation-Aware Graph Transformer for SQL-to-Text Generation, Da Ma, Xingyu Chen, Ruisheng Cao, Zhi Chen, Lu Chen and Kai Yu

👄 Conference Papers

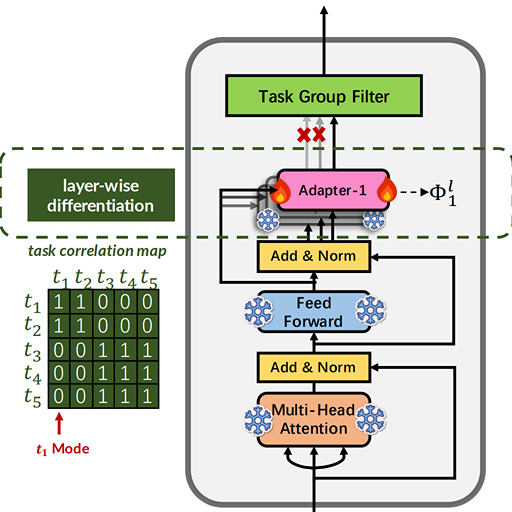

AdapterShare: Task Correlation Modeling with Adapter Differentiation

Zhi Chen, Bei Chen, Lu Chen, Kai Yu and Jian-Guang Lou

- Current MTL methods pay more attention to task selection or model design to fuse as much knowledge as possible, while the intrinsic task correlation is often neglected. It is important to learn sharing strategies among multiple tasks rather than sharing everything. AdapterShare leverages an adapter differentiation method to explicitly model task correlation among multiple tasks. AdapterShare is automatically learned based on the gradients on tiny held-out validation data.

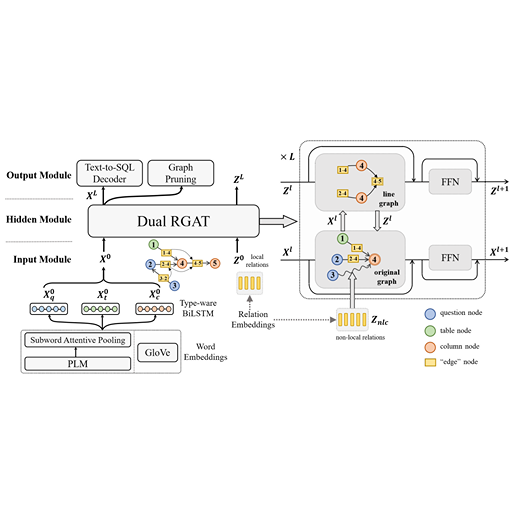

LGESQL: Line Graph Enhanced Text-to-SQL Model with Mixed Local and Non-Local Relations

Ruisheng Cao, Lu Chen, Zhi Chen, Yanbin Zhao, Su Zhu and Kai Yu

- The Line Graph Enhanced Text-to-SQL (LGESQL) model mines the underlying relational features without constructing metapaths. By virtue of the line graph, messages propagate more efficiently through not only the connections between nodes, but also the topology of directed edges. Furthermore, both local and non-local relations are integrated distinctively during the graph iteration.

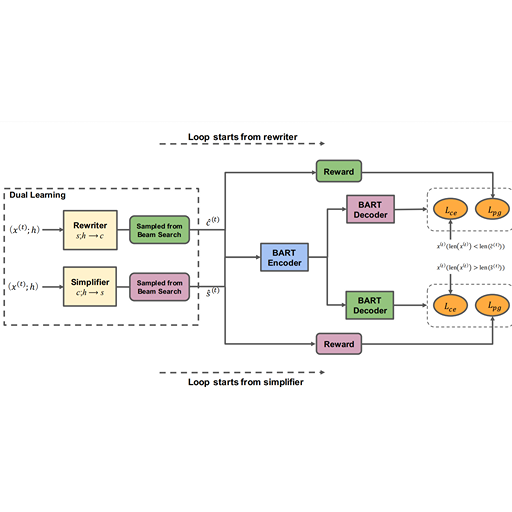

Decoupled Dialogue Modeling and Semantic Parsing for Multi-Turn Text-to-SQL

Zhi Chen, Lu Chen, Hanqi Li, Ruisheng Cao, Da Ma, Mengyue Wu and Kai Yu

- The decoupled multi-turn Text-to-SQL framework has two models: an utterance rewrite model first explicitly completes the dialogue context, and a single-turn Text-to-SQL parser then handles the rewritten utterance. A dual learning approach is also proposed for the utterance rewrite model to address the data sparsity problem. Compared with end-to-end approaches, the proposed decoupled method can achieve excellent performance without any annotated in-domain data.

- SigDial 2022: UniDU: Towards A Unified Generative Dialogue Understanding Framework, Zhi Chen, Lu Chen, Bei Chen, Libo Qin, Yuncong Liu, Su Zhu, Jian-Guang Lou and Kai Yu

- NCMMSC 2022: Dual Learning for Dialogue State Tracking, Zhi Chen, Lu Chen, Yanbin Zhao, Su Zhu, Kai Yu

- NAACL 2021: ShadowGNN: Graph Projection Neural Network for Text-to-SQL Parser, Zhi Chen, Lu Chen, Yanbin Zhao, Ruisheng Cao, Zihan Xu, Su Zhu and Kai Yu

- NLPCC 2021: Few-Shot NLU with Vector Projection Distance and Abstract Triangular CRF, Su Zhu, Lu Chen, Ruisheng Cao, Zhi Chen, Qingliang Miao, Kai Yu

- AAAI 2020: Semi-Supervised Text Simplification with Back-Translation and Asymmetric Denoising Autoencoders, Yanbin Zhao, Lu Chen, Zhi Chen and Kai Yu

- ACL 2020: Line graph enhanced AMR-to-text generation with mix-order graph attention networks, Yanbin Zhao, Lu Chen, Zhi Chen, Ruisheng Cao, Su Zhu, Kai Yu

- ACL 2020: Neural Graph Matching Networks for Chinese Short Text Matching, Lu Chen, Yanbin Zhao, Boer Lyu, Lesheng Jin, Zhi Chen, Su Zhu and Kai Yu

- ICASSP 2020: Policy Adaptation for Deep Reinforcement Learning-based Dialogue Management, Lu Chen, Cheng Chang, Zhi Chen, Bowen Tan, Milica Gašić and Kai Yu

- NLPCC 2020: Memory Attention Neural Network for Multi-domain Dialogue State Tracking, Zihan Xu*, Zhi Chen*, Lu Chen, Su Zhu and Kai Yu

📖 Education

- 2017.09 - 2023.03, Ph.D., Shanghai Jiao Tong University, Shanghai.

- 2013.06 - 2017.09, Bachelor, Northwestern Polytechnical University, Xi’an.

- 2010.09 - 2013.06, Tiancheng Middle School, Anqing.

💻 Internships

- 2022.08 - 2023.03, Shanghai AI Lab, OpenDialogLab, Shanghai.

- 2021.10 - 2022.06, MSRA, DKI Group, Beijing.